LoRA

This interface lets users fine-tune Large Language Models (LLMs) using LoRA (Low-Rank Adaptation). It integrates the open-source Unsloth project and offers a guided, user-friendly workflow.

Key Features:

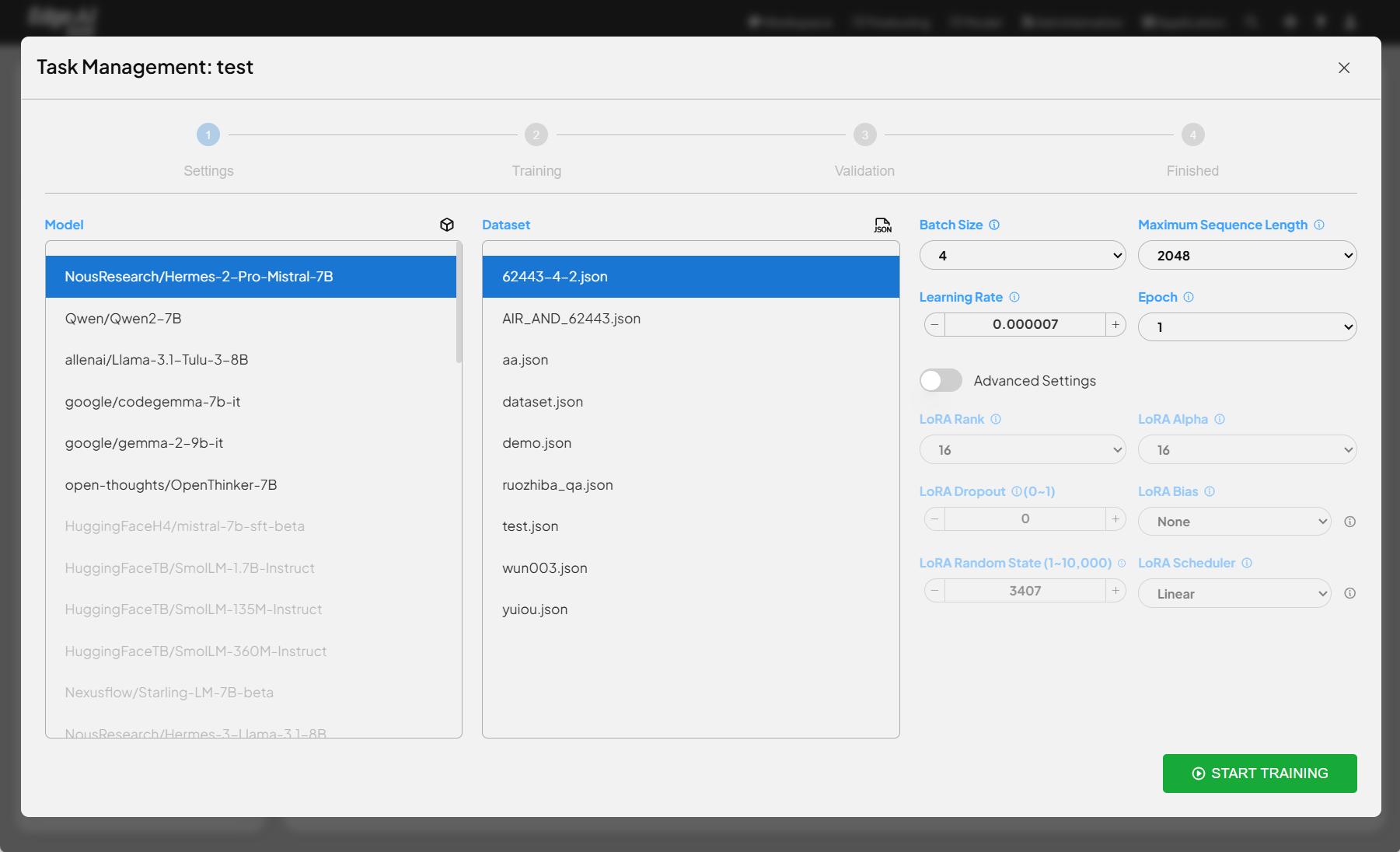

- Task Management: Users can manage multiple fine-tuning tasks.

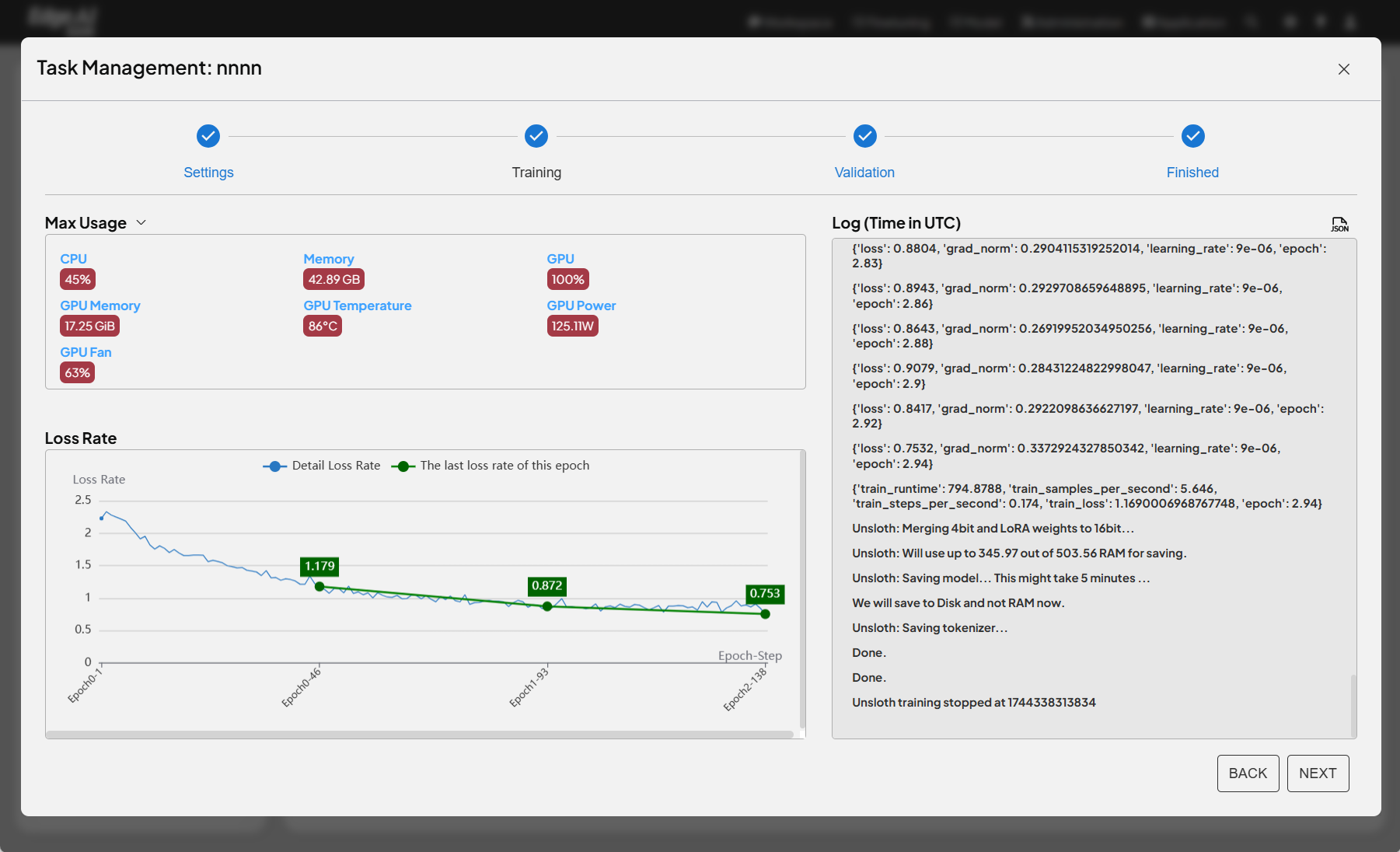

- Step-by-step Process: The interface provides a clear step-by-step process, including settings, training, validation, and completion, to guide users through the fine-tuning procedure.

- Model Selection: Users can select the LLM to be fine-tuned from a drop-down menu.

- Dataset Selection: Users can choose the dataset for fine-tuning.

- Training Parameter Configuration: Users can configure various training parameters, such as batch size, maximum sequence length, learning rate, and training epochs.

- LoRA Configuration: Users can configure LoRA-related parameters, such as rank, alpha value, and other advanced settings like dropout, bias, and scheduler.

- Start Training: Users can initiate the fine-tuning process by clicking a button.

- LoRA Fine-tuning: This feature uses LoRA to fine-tune LLMs efficiently. LoRA is a parameter-efficient fine-tuning technique that freezes the pre-trained model's weights and trains only a small number of additional low-rank parameters. This significantly reduces computational and memory requirements while, in many cases, achieving performance comparable to full-parameter fine-tuning.

- Unsloth Integration: This feature integrates Unsloth, an open-source library for efficient LLM fine-tuning. Unsloth provides an optimized implementation of LoRA that is designed to be faster and more memory-efficient.

- Similarity to Full-Parameter Fine-tuning: The LoRA fine-tuning workflow is similar to full-parameter fine-tuning; the key difference is which parameters are trained. With LoRA, only the additional LoRA parameters are updated, while the pre-trained model's parameters remain unchanged.
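
To make the rank and alpha settings more concrete, here is a minimal sketch of the idea behind LoRA in plain Python. It is not the code used by this interface or by Unsloth. The function names (`lora_param_counts`, `lora_effective_weight`) and the matrix sizes are hypothetical, and the `alpha / rank` scaling follows the common LoRA formulation, which is assumed rather than confirmed for this interface.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_out * d_in            # full-parameter fine-tuning updates every entry of W
    lora = rank * (d_in + d_out)   # LoRA trains only A (rank x d_in) and B (d_out x rank)
    return full, lora


def lora_effective_weight(W, A, B, alpha, rank):
    """Return W + (alpha / rank) * (B @ A) without modifying the frozen W."""
    scale = alpha / rank
    delta = matmul(B, A)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(W, delta)
    ]


random.seed(0)
d_out, d_in, rank, alpha = 4, 6, 2, 4

# Frozen pre-trained weight (random values stand in for a real model layer).
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# Trainable low-rank factors: A starts small and random, B starts at zero,
# so the adapted layer initially behaves exactly like the original one.
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
B = [[0.0] * rank for _ in range(d_out)]

W_eff = lora_effective_weight(W, A, B, alpha, rank)
print("unchanged at initialization:", W_eff == W)

full, lora = lora_param_counts(4096, 4096, 16)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

In this sketch, a larger rank adds more trainable parameters per layer, while alpha only rescales the size of the low-rank update relative to the frozen weight.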