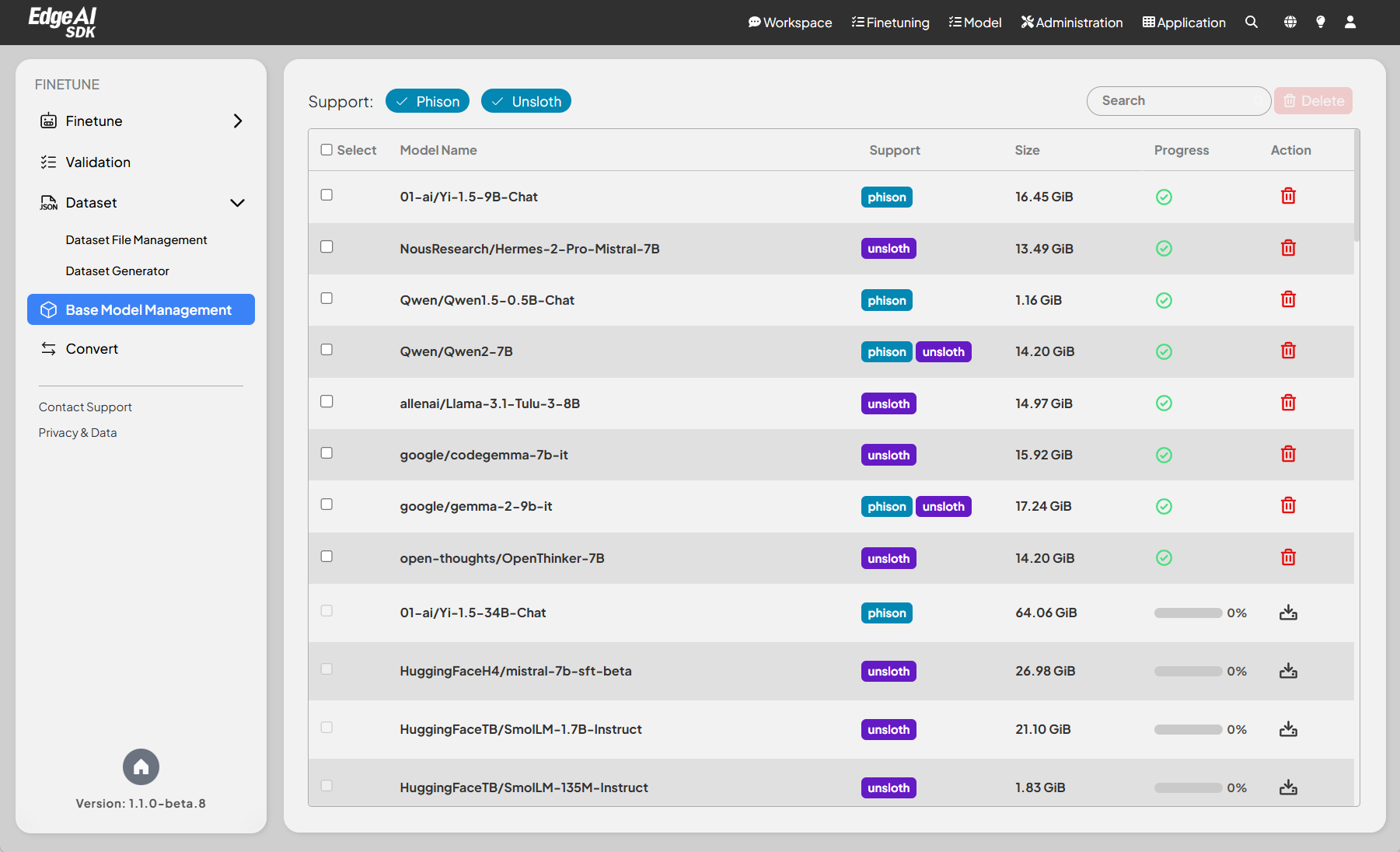

Model Management

The Model Management page lets users view and manage the models supported for fine-tuning and inference. Models are downloaded directly from Hugging Face repositories, so make sure your device is connected to the internet before using this feature.

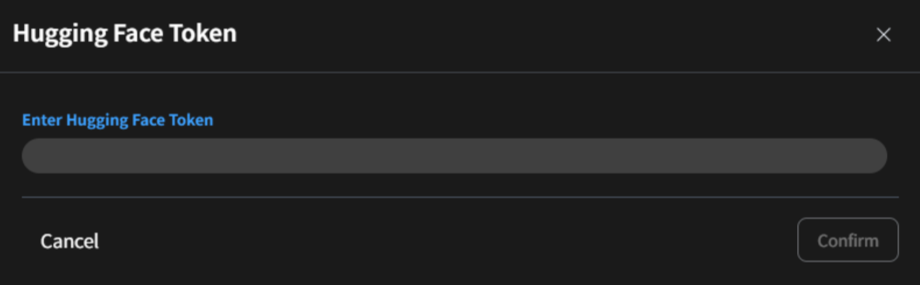

On the first download attempt, users are prompted to provide a Hugging Face Access Token. You can generate this token on the Hugging Face website by following Hugging Face's official token-generation instructions.

Features

- Model List:
    - Displays all available models, including their names, sizes, and current processing status.
    - Users can search for specific models using the search bar.
- Model Details:
    - Model Name: Specifies the name of the model (e.g., `meta-llama/Llama-3.1-8B-Instruct`).
    - Support: Indicates the supported fine-tuning method. The `Phison` tag denotes full-parameter fine-tuning, and the `Unsloth` tag denotes LoRA fine-tuning. A model tagged with both supports both approaches.
    - Size: Shows the storage size of each model (e.g., 29.93 GiB, 262.86 GiB).
    - Process Status: Indicates whether the model is ready to use (✓ means ready).
    - Action: Lets users manage the model (e.g., download, delete).
- Download Process:
    - Displays the download progress for models that are currently being fetched.
    - Shows the model size and the estimated time remaining.
    - Supports `pause` and `resume` for interrupted downloads.
- Access Token Requirement:
    - During the initial download, users must provide a Hugging Face Access Token for authentication.
    - To generate a token, visit the Hugging Face website and follow the official instructions to create and retrieve your personal token.
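The size, progress, and resume behavior described above can be illustrated with a short Python sketch. It is not the application's implementation; it assumes the binary units shown in the Size column (1 GiB = 2^30 bytes), an estimated time remaining based on a constant average transfer rate, and resumption through an HTTP `Range` request for the bytes not yet on disk. All function names are hypothetical.

```python
from __future__ import annotations

import os
import shutil

# Binary units, matching the Size column (1 GiB = 1024**3 bytes).
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def parse_size(text: str) -> int:
    """Convert a Size column value such as '29.93 GiB' to a byte count."""
    value, unit = text.split()
    return round(float(value) * UNITS[unit])


def has_space(size_text: str, path: str = ".") -> bool:
    """Check whether the drive holding `path` has room for a model of this size."""
    return shutil.disk_usage(path).free >= parse_size(size_text)


def progress(downloaded: int, total: int, elapsed_s: float) -> tuple[float, float | None]:
    """Return (percent complete, estimated seconds remaining).

    The estimate assumes the average rate so far stays constant; it is None
    until at least one byte has arrived.
    """
    percent = 100.0 * downloaded / total if total else 0.0
    if downloaded == 0 or elapsed_s <= 0:
        return percent, None
    rate = downloaded / elapsed_s  # bytes per second
    return percent, (total - downloaded) / rate


def resume_headers(partial_path: str) -> dict[str, str]:
    """Headers for resuming a paused download with an HTTP Range request.

    If part of the file is already on disk, request only the remaining
    bytes; otherwise send no Range header and start from the beginning.
    """
    offset = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    return {"Range": f"bytes={offset}-"} if offset else {}
```

For example, a 29.93 GiB model that is one quarter downloaded after 120 seconds reports 25% complete with roughly 360 seconds remaining, and `has_space("2.22 TiB")` indicates whether the current drive could hold `meta-llama/Llama-3.1-405B-Instruct` before you start the download.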

Supported Models

Supported models are listed below for each release: v1.2.0, v1.1.2, and v1.0.0.

v1.2.0

- Full Parameters (Phison Supported)

| Model Family | Model |
|---|---|
| Deepseek-ai | DeepSeek-R1-Distill-Llama-70B |
| | DeepSeek-R1-Distill-Qwen-32B |
| | deepseek-llm-7b-chat |
| Google | gemma-3-27b-it |
| | gemma-3-1b-it |
| | gemma-2-9b-it |
| Meta-llama | Llama-3.3-70B-Instruct |
| | Llama-3.2-3B-Instruct |
| | Llama-3.1-405B-Instruct |
| | Llama-3.1-8B-Instruct |
| | Llama-3-Taiwan-70B-Instruct |
| Microsoft | Phi-4-reasoning |
| | phi-4 |
| | Phi-3.5-mini-instruct |
| Mistral | Mistral-Small-3.1-24B-Instruct-2503 |
| | Mixtral-8x22B-Instruct-v0.1 |
| | Mixtral-8x7B-Instruct-v0.1 |
| Qwen | Qwen3-32B |
| | Qwen3-0.6B |
| | QwQ-32B |
| | Qwen2.5-72B-Instruct |
| | Qwen2-7B |

- LoRA (Unsloth Supported)

| Model Family | Model |
|---|---|
| Deepseek-ai | DeepSeek-R1-Distill-Llama-8B |
| | DeepSeek-R1-Distill-Qwen-14B |
| | DeepSeek-R1-Distill-Qwen-1.5B |
| | DeepSeek-R1-Distill-Qwen-7B |
| Google | gemma-3-1b-it |
| | gemma-3-4b-it |
| | gemma-3-12b-it |
| Meta-llama | Llama-3.1-8B-Instruct |
| | Llama-3.2-1B-Instruct |
| | Llama-3.2-3B-Instruct |
| Mistralai | Mistral-7B-Instruct-v0.3 |
| | Mistral-Nemo-Instruct-2407 |
| Qwen | Qwen3-0.6B |
| | Qwen3-0.6B-Base |
| | Qwen3-1.7B |
| | Qwen3-1.7B-Base |
| | Qwen3-4B |
| | Qwen3-4B-Base |
| | Qwen3-8B |
| | Qwen3-8B-Base |
| | Qwen3-14B |
| | Qwen3-14B-Base |
| Microsoft | Phi-3-mini-4k-instruct |
| | Phi-3-medium-4k-instruct |
| IBM-granite | granite-3.2-2b-instruct |
| | granite-3.2-8b-instruct |

v1.1.2

| Model Name | Support | Size |
|---|---|---|
| google/gemma-2b-it | unsloth | 14.03 GiB |
| meta-llama/Llama-3.1-8B-Instruct | phison, unsloth | 29.93 GiB |
| 01-ai/Yi-1.5-34B-Chat | phison | 64.06 GiB |
| 01-ai/Yi-1.5-9B-Chat | phison | 16.45 GiB |
| HuggingFaceH4/mistral-7b-sft-beta | unsloth | 26.98 GiB |
| HuggingFaceTB/SmolLM-1.7B-Instruct | unsloth | 21.10 GiB |
| HuggingFaceTB/SmolLM-135M-Instruct | unsloth | 1.83 GiB |
| HuggingFaceTB/SmolLM-360M-Instruct | unsloth | 4.67 GiB |
| MediaTek-Research/Breeze-7B-Instruct-v0_1 | phison | 27.90 GiB |
| Nexusflow/Starling-LM-7B-beta | unsloth | 13.49 GiB |
| NousResearch/Hermes-2-Pro-Mistral-7B | unsloth | 13.49 GiB |
| NousResearch/Hermes-3-Llama-3.1-8B | unsloth | 14.97 GiB |
| Qwen/Qwen1.5-0.5B-Chat | phison | 1.16 GiB |
| Qwen/Qwen1.5-1.8B-Chat | phison | 3.43 GiB |
| Qwen/Qwen1.5-110B-Chat | phison | 207.16 GiB |
| Qwen/Qwen1.5-14B-Chat | phison | 26.40 GiB |
| Qwen/Qwen1.5-4B-Chat | phison | 7.37 GiB |
| Qwen/Qwen1.5-72B-Chat | phison | 134.66 GiB |
| Qwen/Qwen1.5-7B-Chat | phison | 14.39 GiB |
| Qwen/Qwen2-0.5B | unsloth | 953.29 MiB |
| Qwen/Qwen2-0.5B-Instruct | unsloth | 953.29 MiB |
| Qwen/Qwen2-1.5B | unsloth | 2.89 GiB |
| Qwen/Qwen2-1.5B-Instruct | unsloth | 2.89 GiB |
| Qwen/Qwen2-72B | phison | 135.44 GiB |
| Qwen/Qwen2-72B-Instruct | phison | 135.44 GiB |
| Qwen/Qwen2-7B | phison, unsloth | 14.20 GiB |
| Qwen/Qwen2-7B-Instruct | unsloth | 14.20 GiB |
| Qwen/Qwen2.5-0.5B-Instruct | unsloth | 953.30 MiB |
| Qwen/Qwen2.5-1.5B-Instruct | unsloth | 2.89 GiB |
| Qwen/Qwen2.5-3B-Instruct | unsloth | 5.76 GiB |
| Qwen/Qwen2.5-72B-Instruct | phison | 135.44 GiB |
| Qwen/Qwen2.5-7B-Instruct | unsloth | 14.20 GiB |
| TinyLlama/TinyLlama-1.1B-Chat-v1.0 | unsloth | 2.05 GiB |
| akjindal53244/Llama-3.1-Storm-8B | unsloth | 14.97 GiB |
| allenai/Llama-3.1-Tulu-3-8B | unsloth | 14.97 GiB |
| deepseek-ai/DeepSeek-Coder-V2-Instruct | phison | 439.11 GiB |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | unsloth | 14.97 GiB |
| deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B | unsloth | 3.32 GiB |
| deepseek-ai/DeepSeek-R1-Distill-Qwen-7B | unsloth | 14.19 GiB |
| deepseek-ai/deepseek-llm-7b-chat | phison | 12.88 GiB |
| google/codegemma-7b-it | unsloth | 15.92 GiB |
| google/gemma-1.1-2b-it | unsloth | 4.69 GiB |
| google/gemma-1.1-7b-it | unsloth | 15.92 GiB |
| google/gemma-2-27b-it | phison | 50.74 GiB |
| google/gemma-2-9b-it | phison | 17.24 GiB |
| google/gemma-7b-it | unsloth | 47.74 GiB |
| meta-llama/Llama-2-7b-chat-hf | unsloth | 25.10 GiB |
| meta-llama/Llama-3.1-405B-Instruct | phison | 2.22 TiB |
| meta-llama/Llama-3.1-70B-Instruct | phison | 262.86 GiB |
| meta-llama/Llama-3.2-3B-Instruct | unsloth | 11.98 GiB |
| meta-llama/LlamaGuard-7b | phison | 25.11 GiB |
| meta-llama/Meta-Llama-3-70B-Instruct | phison | 262.86 GiB |
| meta-llama/Meta-Llama-3-8B-Instruct | phison, unsloth | 29.93 GiB |
| mistralai/Mistral-7B-Instruct-v0.1 | unsloth | 27.47 GiB |
| mistralai/Mistral-7B-Instruct-v0.2 | unsloth | 27.47 GiB |
| mistralai/Mistral-7B-Instruct-v0.3 | unsloth | 27.00 GiB |
| mistralai/Mixtral-8x22B-Instruct-v0.1 | phison | 261.95 GiB |
| mistralai/Mixtral-8x7B-Instruct-v0.1 | phison | 177.40 GiB |
| teknium/OpenHermes-2.5-Mistral-7B | unsloth | 26.98 GiB |
| yentinglin/Llama-3-Taiwan-70B-Instruct | phison | 31.43 GiB |

v1.0.0

| Model Name | Size |
|---|---|
| google/gemma-2-9b-it | 18.51 GiB |
| meta-llama/Llama-3.1-8B-Instruct | 29.93 GiB |
| meta-llama/Llama-3.1-70B-Instruct | 262.91 GiB |
| meta-llama/Llama-3.1-405B-Instruct | 2.22 TiB |
| meta-llama/Llama-2-7b-chat-hf | 25.11 GiB |
| meta-llama/Llama-2-13b-chat-hf | 48.48 GiB |
| meta-llama/Llama-2-70b-chat-hf | 256.96 GiB |
| meta-llama/Meta-Llama-3-8B-Instruct | 29.93 GiB |
| meta-llama/Meta-Llama-3-70B-Instruct | 262.91 GiB |
| yentinglin/Llama-3-Taiwan-70B-Instruct | 131.45 GiB |
| meta-llama/LlamaGuard-7b | 25.11 GiB |
| codellama/CodeLlama-7b-Instruct-hf | 25.11 GiB |
| codellama/CodeLlama-13b-Instruct-hf | 48.79 GiB |
| codellama/CodeLlama-70b-Instruct-hf | 257.09 GiB |
| mistralai/Mixtral-8x7B-Instruct-v0.1 | 177.39 GiB |
| mistralai/Mixtral-8x22B-Instruct-v0.1 | 261.99 GiB |
| taide/TAIDE-LX-7B-Chat | 12.92 GiB |
| MediaTek-Research/Breeze-7B-Instruct-v1_0 | 13.95 GiB |
| MediaTek-Research/Breeze-7B-32k-Instruct-v1_0 | 27.92 GiB |
| tiiuae/falcon-180B-chat | 334.41 GiB |
| mistralai/Mistral-7B-Instruct-v0.3 | 27.01 GiB |
| Qwen/Qwen2-7B | 14.2 GiB |
| Qwen/Qwen2-7B-Instruct | 14.2 GiB |
| Qwen/Qwen2-72B | 135.44 GiB |
| Qwen/Qwen2-72B-Instruct | 135.44 GiB |
| Qwen/Qwen1.5-0.5B-Chat | 1.16 GiB |
| Qwen/Qwen1.5-1.8B-Chat | 3.43 GiB |
| Qwen/Qwen1.5-4B-Chat | 7.37 GiB |
| Qwen/Qwen1.5-7B-Chat | 14.39 GiB |
| Qwen/Qwen1.5-14B-Chat | 26.41 GiB |
| Qwen/Qwen1.5-72B-Chat | 134.68 GiB |
| Qwen/Qwen1.5-110B-Chat | 207.14 GiB |
| deepseek-ai/deepseek-llm-7b-chat | 12.88 GiB |
| deepseek-ai/deepseek-llm-67b-chat | 125.56 GiB |
| deepseek-ai/deepseek-moe-16b-chat | 30.5 GiB |
| 01-ai/Yi-1.5-6B-Chat | 11.3 GiB |
| 01-ai/Yi-1.5-9B-Chat | 16.45 GiB |
| 01-ai/Yi-1.5-34B-Chat | 4.07 GiB |

Steps for Access Token

- Navigate to the Hugging Face website: https://huggingface.co.

- Log in to your Hugging Face account.

- Go to your account settings and select the Access Tokens tab.

- Generate a new token. A token with the Read role is sufficient for downloading models.

- Copy the token and provide it when prompted during the model download process in the application.

For more details, refer to the Hugging Face documentation.
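The token you paste into the application is an ordinary Hugging Face user access token, so it can also be checked from a script before you use it. The Python sketch below is a minimal illustration, not part of the application: it assumes the token is passed in directly or stored in the `HF_TOKEN` environment variable (the variable the `huggingface_hub` library reads), builds the Bearer `Authorization` header that the Hugging Face Hub API accepts, and optionally confirms the token with `huggingface_hub.whoami`. The helper names are hypothetical.

```python
from __future__ import annotations

import os


def resolve_token(token: str | None = None) -> str:
    """Return a Hugging Face token from the argument or the HF_TOKEN variable.

    Surrounding whitespace (a common copy-paste artifact) is stripped.
    Raises ValueError if no token is available.
    """
    value = (token if token is not None else os.environ.get("HF_TOKEN", "")).strip()
    if not value:
        raise ValueError("No Hugging Face token provided; set HF_TOKEN or pass one explicitly.")
    return value


def auth_headers(token: str | None = None) -> dict[str, str]:
    """HTTP headers for an authenticated Hugging Face Hub request (Bearer scheme)."""
    return {"Authorization": f"Bearer {resolve_token(token)}"}


def verify_token(token: str | None = None) -> str:
    """Ask the Hub which account the token belongs to (requires network access).

    huggingface_hub.whoami raises an error if the token is invalid.
    """
    from huggingface_hub import whoami  # imported lazily: optional dependency

    return whoami(token=resolve_token(token))["name"]
```

With `huggingface_hub` installed and `HF_TOKEN` set, calling `verify_token()` should return your Hugging Face username if the token is valid, which is a quick check before pasting the token into the application.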

Warning: Some models, such as Llama, are gated. You must agree to share your contact information on the model's Hugging Face page before you can download them.