Utilities

An introduction to the command-line utilities (CLIs) that GenAI Studio provides and how to use them.

Introduction

GenAI Studio is an AI platform based on Ubuntu Linux. We provide several command-line interface (CLI) utilities that make GenAI Studio easy to manage. This document introduces each of them and shows how to use them.

Before going further, note that all utilities are located in the bin folder under the GenAI Studio installation directory. If you run a utility from inside the bin directory and get an error such as "app-ps: command not found", prefix the command with ./ so that the shell can find it, for example ./app-up.
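The behaviour described above is ordinary shell lookup: a bare name is searched for only in the directories listed in PATH, whereas ./app-up is an explicit path. The following Python sketch mirrors that rule, which may help if you call the utilities from your own scripts. resolve_utility and the /opt/genai-studio/bin directory are hypothetical placeholders, not part of GenAI Studio.

```python
import os
import shutil
from pathlib import Path


def resolve_utility(name: str, bin_dir: str) -> str:
    """Return a runnable path for a GenAI Studio utility such as app-ps.

    A bare name is looked up on PATH only (like typing `app-ps`); if that
    fails, fall back to an explicit path inside bin_dir (like `./app-ps`).
    """
    found = shutil.which(name)
    if found:
        return found
    candidate = Path(bin_dir) / name
    if candidate.is_file() and os.access(candidate, os.X_OK):
        return str(candidate)
    raise FileNotFoundError(f"{name}: command not found in PATH or {bin_dir}")


if __name__ == "__main__":
    # Hypothetical installation directory; replace it with your own.
    try:
        print(resolve_utility("app-ps", "/opt/genai-studio/bin"))
    except FileNotFoundError as err:
        print(err)
```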

Service Management Utilities

The first group of utilities is the service management utilities, whose names start with the prefix app-.

Most of them accept an optional argument, a service name, which limits the utility to that service only.

The service names you can provide are listed below:

- autoheal

- dcgm-exporter

- flowise

- ftp

- grafana

- node-exporter

- ollama

- postgresql

- prometheus

- qdrant

- server

The autoheal, dcgm-exporter, flowise, ftp, grafana, node-exporter, and prometheus services are available since the v1.1.0 release.

Apart from the server service, do not operate on these services individually unless you know what you are doing.
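A script that calls these utilities could check a service name before passing it on. Below is a minimal Python sketch under that assumption: the service list and the v1.1.0 cut-off come from this section, while check_service and parse_version are hypothetical helpers, not GenAI Studio APIs.

```python
import warnings
from typing import Tuple

# Service names accepted by the app-* utilities, as listed above.
SERVICES = {
    "autoheal", "dcgm-exporter", "flowise", "ftp", "grafana", "node-exporter",
    "ollama", "postgresql", "prometheus", "qdrant", "server",
}

# Services that are only available from the v1.1.0 release onward.
SINCE_V1_1_0 = {
    "autoheal", "dcgm-exporter", "flowise", "ftp", "grafana", "node-exporter",
    "prometheus",
}


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn 'v1.1.0' (or '1.1.0') into a comparable tuple such as (1, 1, 0)."""
    return tuple(int(part) for part in version.lstrip("v").split("."))


def check_service(name: str, installed_version: str) -> None:
    """Raise ValueError for names this release does not know; warn for non-server services."""
    if name not in SERVICES:
        raise ValueError(f"unknown service: {name!r}")
    if name in SINCE_V1_1_0 and parse_version(installed_version) < (1, 1, 0):
        raise ValueError(f"{name!r} requires GenAI Studio v1.1.0 or later")
    if name != "server":
        # The document advises acting only on `server` unless you know what you are doing.
        warnings.warn(f"operating on {name!r} directly; make sure this is intended")
```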

app-up

This utility starts all services. To start a single service only, pass its name as the argument. For example:

- ./app-up starts all services.

- ./app-up server starts the GenAI Studio server as well as all services it depends on.

app-down

This utility is the counterpart of app-up. It shuts down all services, or only a single service if you pass that service name as the argument.

app-logs

This utility shows the log messages of all services. In practice, it's recommended to pass a service name as the argument so that only that service's logs are shown. In addition, the -f option makes the output follow new messages as they are logged, and the -t option shows the timestamp of each message.

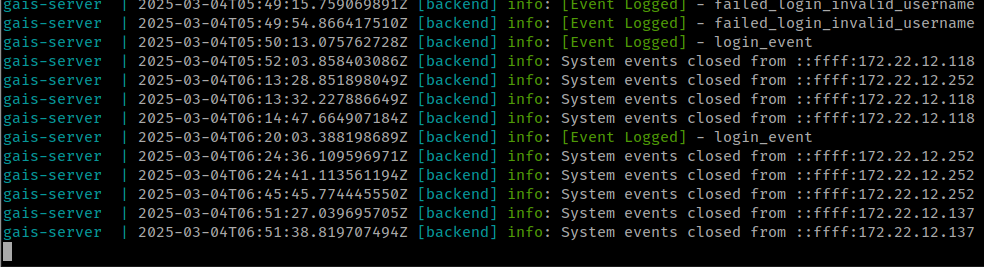

The image below was captured by issuing command ./app-logs server -ft.
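If you drive app-logs from a script, the option handling described above can be captured in a helper like the following Python sketch. It only builds the command line; app_logs_argv is a hypothetical name, and the -f and -t meanings are the ones described in this section.

```python
from typing import List, Optional


def app_logs_argv(service: Optional[str] = None, follow: bool = False,
                  timestamps: bool = False) -> List[str]:
    """Build the argument list for ./app-logs.

    -f follows new messages and -t shows timestamps; when both are set they
    are combined into a single '-ft' flag, as in `./app-logs server -ft`.
    """
    argv = ["./app-logs"]
    if service:
        argv.append(service)
    flags = ("f" if follow else "") + ("t" if timestamps else "")
    if flags:
        argv.append("-" + flags)
    return argv


if __name__ == "__main__":
    # Pass the result to subprocess.run(...) from inside the bin directory to stream logs.
    print(app_logs_argv("server", follow=True, timestamps=True))
```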

app-ps

This utility shows which services are currently running. Like the other utilities it accepts a service name, though this is rarely needed.

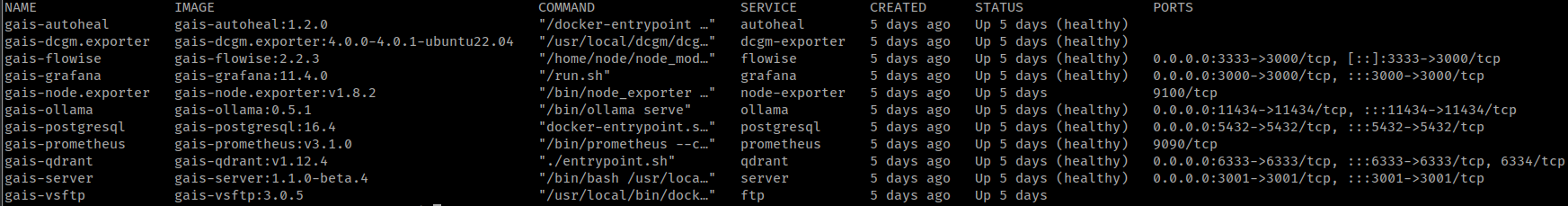

The next image shows the result of ./app-ps.

app-restart

This utility restarts services. Using it is not recommended, because services may need to be fully reloaded; run app-down and then app-up instead.
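The down-then-up sequence recommended above can be scripted. This Python sketch assumes it is run from the bin directory; restart_commands and restart are hypothetical names, and dry_run defaults to True so nothing is executed unless you ask for it.

```python
import subprocess
from typing import List, Optional


def restart_commands(service: Optional[str] = None) -> List[List[str]]:
    """The recommended replacement for app-restart: app-down first, then app-up."""
    target = [service] if service else []
    return [["./app-down", *target], ["./app-up", *target]]


def restart(service: Optional[str] = None, dry_run: bool = True) -> List[List[str]]:
    """Run the down/up sequence in order, or only print it when dry_run is True."""
    commands = restart_commands(service)
    for argv in commands:
        if dry_run:
            print("would run:", " ".join(argv))
        else:
            # check=True raises CalledProcessError if app-down fails,
            # so app-up is not attempted after a failed shutdown.
            subprocess.run(argv, check=True)
    return commands


if __name__ == "__main__":
    restart("server")  # dry run: prints the two commands without executing them
```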

app-version

This utility simply shows the GenAI Studio version. It is the only utility that does not accept a service name as an argument.

app-version is available since the v1.1.0 release.
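The service-name rule for the app-* utilities can be summed up in one hypothetical helper: every utility described in this section optionally takes a service name except app-version, which takes none. A minimal Python sketch (app_argv is an illustrative name, not a GenAI Studio API):

```python
from typing import List, Optional

# Utilities in this section that optionally take a service name.
SERVICE_AWARE = {"app-up", "app-down", "app-logs", "app-ps", "app-restart"}


def app_argv(utility: str, service: Optional[str] = None) -> List[str]:
    """Build a command line for an app-* utility, enforcing the service-name rule."""
    if utility == "app-version":
        if service is not None:
            raise ValueError("app-version does not accept a service name")
        return ["./app-version"]
    if utility not in SERVICE_AWARE:
        raise ValueError(f"unknown utility: {utility!r}")
    return [f"./{utility}", service] if service else [f"./{utility}"]
```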

Model Management Utilities

Whatever you do on the GenAI Studio platform, models are almost certainly what you care about most. GenAI Studio handles two kinds of models: training models and inference models. Training models are not supported yet (support is planned for the near future), while inference models are available now.

ollama-model

This command-line tool allows system administrators to easily manage models available to the Ollama inference server in GenAI Studio. Through this tool, administrators can:

- Check which models are currently available for Ollama in GenAI Studio.

- Export models from Ollama in GenAI Studio as model files that can be used by GenAI Studio later.

- Import models from the Ollama online registry or from model files created by GenAI Studio.

- Remove unused models from Ollama in GenAI Studio.

ollama-model is available since the v1.1.0 release.

Case 1: List available models in GenAI Studio's Ollama

To check which models are currently available for Ollama in GenAI Studio, use the list subcommand to list all models.

ollama-model list --from-builtin

Case 2: Export a model from GenAI Studio's Ollama as a GenAI Studio model file

Use the export subcommand to export the phi4 model from Ollama in GenAI Studio as a GenAI Studio model file.

ollama-model export phi4 --from-builtin

After execution, a model file named phi4-latest.model will be generated in the current directory.

Case 3: Import a model from a GenAI Studio-created model file

Use the import subcommand to import the phi4-latest.model model file from the previous example into Ollama in GenAI Studio.

ollama-model import phi4-latest.model

Case 4: Import a model from the Ollama online registry into GenAI Studio's Ollama

The following command can directly import gpt-oss from the Ollama online registry into Ollama in GenAI Studio.

ollama-model import gpt-oss --from-registry

Case 5: Delete a model from GenAI Studio's Ollama

To delete the model gpt-oss from Ollama in GenAI Studio, use the remove subcommand.

ollama-model remove gpt-oss --from-builtin

In addition to the basic usage described above, ollama-model also allows administrators to perform limited operations with Ollama outside GenAI Studio. Run ollama-model --help to get complete usage information and related details.
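For reference, the five cases above follow one pattern: a subcommand, an optional model name or model file, and an optional --from-builtin or --from-registry flag. The Python sketch below assembles those command lines. ollama_model_argv is a hypothetical helper that only uses the subcommands and flags shown in this section; consult ollama-model --help for anything else.

```python
from typing import List, Optional

SUBCOMMANDS = {"list", "export", "import", "remove"}
SOURCE_FLAGS = {"builtin": "--from-builtin", "registry": "--from-registry"}


def ollama_model_argv(subcommand: str, target: Optional[str] = None,
                      source: Optional[str] = None) -> List[str]:
    """Assemble an ollama-model command line from the documented pieces."""
    if subcommand not in SUBCOMMANDS:
        raise ValueError(f"unsupported subcommand: {subcommand!r}")
    if subcommand != "list" and not target:
        raise ValueError(f"{subcommand} needs a model name or model file")
    argv = ["ollama-model", subcommand]
    if target:
        argv.append(target)
    if source:
        argv.append(SOURCE_FLAGS[source])  # KeyError for an unknown source
    return argv


# The five cases from this section, in order.
CASES = [
    ollama_model_argv("list", source="builtin"),
    ollama_model_argv("export", "phi4", source="builtin"),
    ollama_model_argv("import", "phi4-latest.model"),
    ollama_model_argv("import", "gpt-oss", source="registry"),
    ollama_model_argv("remove", "gpt-oss", source="builtin"),
]

if __name__ == "__main__":
    for argv in CASES:
        print(" ".join(argv))
```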

MCP (Model Context Protocol) Server Management Utilities

Model Context Protocol (MCP) is a protocol that provides context, tools, and prompts to AI clients. An MCP server can expose data sources such as documents, files, databases, and API integrations, enabling AI assistants to access real-time information securely. GenAI Studio has supported MCP server integration since version 1.2.0, and pre-loads the following commands to support MCP server operations:

- npx

- uv or uvx

- node

- bash

mcp-server

GenAI Studio provides the mcp-server command-line tool, which allows administrators to seamlessly integrate the tools users need with GenAI Studio. Through mcp-server, administrators can:

- List the current MCP servers integrated with GenAI Studio.

- Import MCP servers into GenAI Studio.

- Remove MCP servers from GenAI Studio.

The following examples illustrate each operation.

Case 1: Importing an MCP Server into GenAI Studio

The import subcommand can import an MCP server into GenAI Studio, as shown below.

mcp-server import --source SRC_PATH --config CONFIG_FILE

Here, SRC_PATH is the runtime environment archive (.zip) of the MCP server, and CONFIG_FILE is the configuration file for this MCP server. The following is the content of a basic MCP server configuration.

{
  "My MCP Server": {
    "command": "npx",
    "args": [
      "tsx",
      "%%My MCP Server%%/index.ts"
    ],
    "env": {
      "GRAFANA_URL": "http://grafana:3000",
      "GRAFANA_API_KEY": "glsa_aIude91214fryVZ6tIVFYczXpemKm8sFmdXatem3_"
    }
  }
}

-

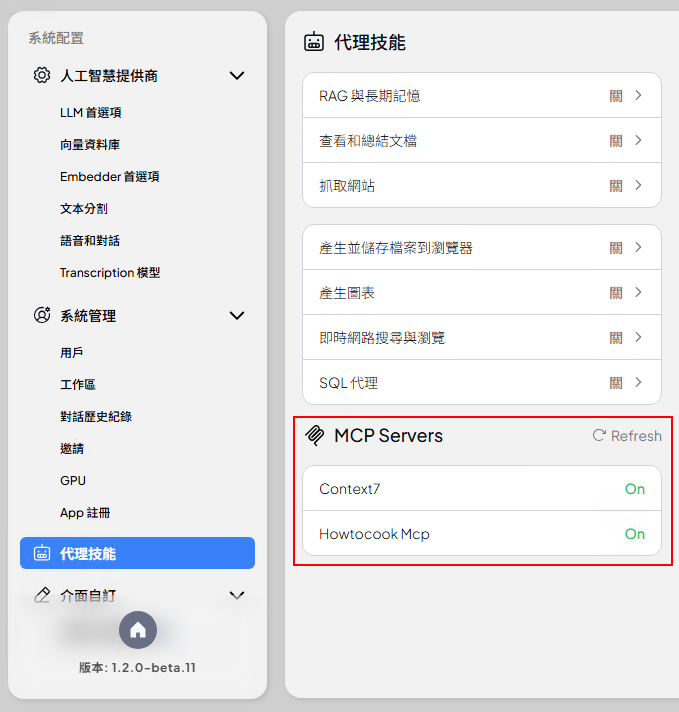

My MCP Serveron line 2 is the name of the MCP server to be imported, which will be displayed in the list under System Configurations > Agent Skills > MCP Servers and must be unique (as shown in the image below).

-

%%My MCP Server%%on line 6 will be replaced with the actual directory where the MCP server is located during import. TheMy MCP Serverhere must match the name on line 2.
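Before running mcp-server import, you may want to check a configuration against the rules above. This Python sketch is an illustration under stated assumptions, not a GenAI Studio tool: validate_mcp_config and import_argv are hypothetical, the example omits GRAFANA_API_KEY for brevity, and flagging commands outside the pre-loaded set (npx, uv, uvx, node, bash) is our own heuristic rather than a documented restriction.

```python
import json
import re
from typing import Any, Dict, Iterable, List

# Commands this document says GenAI Studio pre-loads for MCP servers.
PRELOADED_COMMANDS = {"npx", "uv", "uvx", "node", "bash"}
PLACEHOLDER = re.compile(r"%%(.+?)%%")


def validate_mcp_config(config: Dict[str, Any], existing: Iterable[str] = ()) -> List[str]:
    """Return a list of problems found in an MCP server configuration."""
    problems: List[str] = []
    taken = set(existing)
    for name, server in config.items():
        if name in taken:
            problems.append(f"{name}: an MCP server with this name is already imported")
        command = server.get("command")
        if command not in PRELOADED_COMMANDS:
            problems.append(f"{name}: command {command!r} may not be available (not pre-loaded)")
        for arg in server.get("args", []):
            for ref in PLACEHOLDER.findall(arg):
                if ref != name:
                    problems.append(f"{name}: placeholder %%{ref}%% does not match the server name")
    return problems


def import_argv(src_zip: str, config_file: str) -> List[str]:
    """Build the `mcp-server import` command line; SRC_PATH must be a .zip archive."""
    if not src_zip.endswith(".zip"):
        raise ValueError("--source must point to the MCP server's .zip archive")
    return ["mcp-server", "import", "--source", src_zip, "--config", config_file]


EXAMPLE = json.loads("""
{
  "My MCP Server": {
    "command": "npx",
    "args": ["tsx", "%%My MCP Server%%/index.ts"],
    "env": {"GRAFANA_URL": "http://grafana:3000"}
  }
}
""")

if __name__ == "__main__":
    print(validate_mcp_config(EXAMPLE) or "config looks consistent")
```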

Case 2: Listing MCP Servers Currently Integrated with GenAI Studio

The list (or ls) subcommand lists the names of the MCP servers currently integrated with GenAI Studio.

mcp-server list

Case 3: Removing an MCP Server from GenAI Studio

The remove subcommand can directly remove an MCP server that has been integrated into GenAI Studio.

mcp-server remove "My MCP Server"

Since My MCP Server contains whitespace characters, omitting the double quotes around it causes mcp-server to fail.
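The failure comes from the shell splitting unquoted arguments on whitespace. Python's shlex module follows POSIX shell splitting rules, so it can show what mcp-server receives in each case (the exact error message mcp-server prints is not covered here):

```python
import shlex

# How a POSIX shell splits each command line into arguments.
unquoted = shlex.split("mcp-server remove My MCP Server")
quoted = shlex.split('mcp-server remove "My MCP Server"')

print(unquoted)  # ['mcp-server', 'remove', 'My', 'MCP', 'Server']
print(quoted)    # ['mcp-server', 'remove', 'My MCP Server']

# When building a command string programmatically, shlex.quote adds the quoting.
name = "My MCP Server"
print("mcp-server remove " + shlex.quote(name))  # mcp-server remove 'My MCP Server'
```

Alternatively, passing ["mcp-server", "remove", name] as a list to subprocess.run bypasses the shell entirely, so no quoting is needed.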