Version 1.1

The GenAI Studio 1.1 series has been released:

- Initial version v1.1.0 was released on 2025/04/22.

- Patch version v1.1.1 was released on 2025/04/23.

- Latest patch version v1.1.2 was released on 2025/05/06.

✨ What's New?

🔧 Revamped Management Interface & Optimized Architecture

- Entirely refactored GenAI Studio management interface

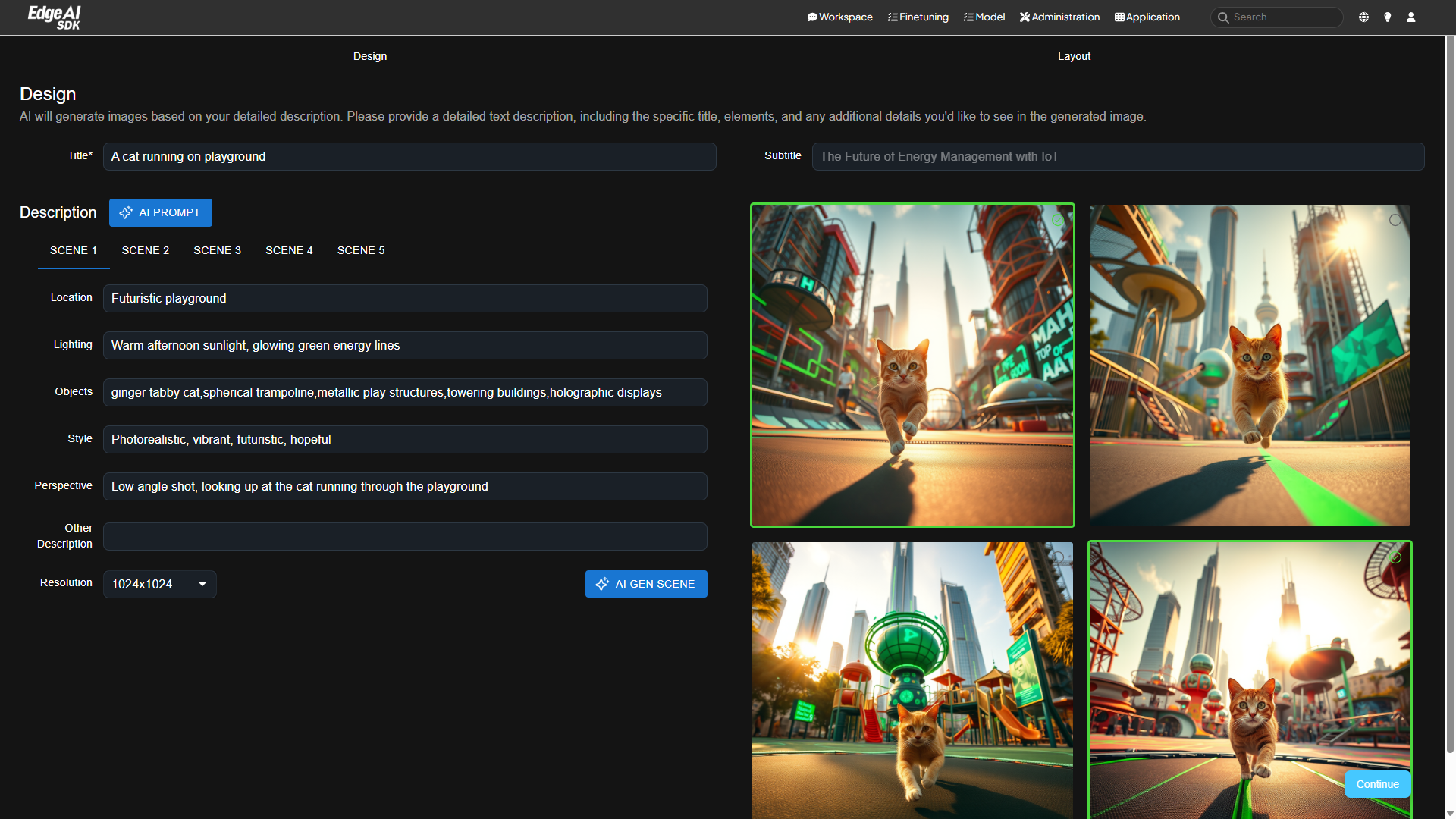

- Enhanced architecture to better support Inference Apps such as Flux.1 Schnell (Text to Image) and ScrapeGraphAI

- Easier management and streamlined deployment of diverse GenAI applications

🔄 RAGOps Auto-Sync

- Automatic document synchronization from designated folders directly to your vector databases

- Significantly improved RAG (Retrieval-Augmented Generation) workflow efficiency

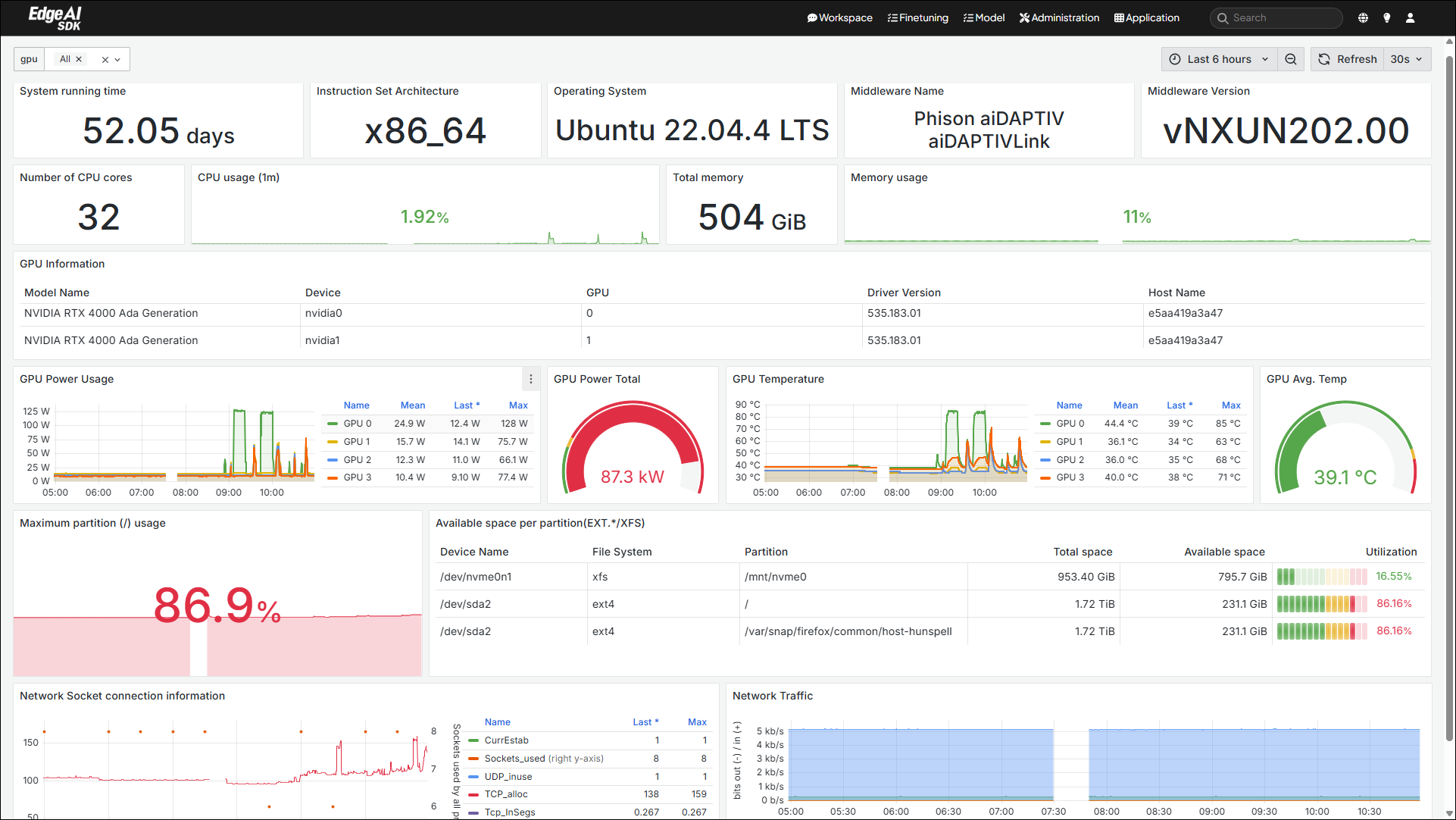
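The change-detection step behind a folder-to-vector-database sync can be sketched as follows. This is a minimal illustration, not GenAI Studio's actual implementation: the function names `fingerprint_folder` and `plan_sync` are hypothetical, and the sketch assumes that comparing content hashes between two scans is enough to decide which documents need re-indexing.

```python
import hashlib
from pathlib import Path


def fingerprint_folder(folder: Path) -> dict[str, str]:
    """Map each file's relative path to the SHA-256 digest of its contents."""
    digests: dict[str, str] = {}
    for path in sorted(folder.rglob("*")):
        if path.is_file():
            rel = path.relative_to(folder).as_posix()
            digests[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def plan_sync(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two scans and list what a (hypothetical) indexer would need to do."""
    return {
        # New files and files whose contents changed must be (re-)embedded.
        "upsert": sorted(p for p, h in current.items() if previous.get(p) != h),
        # Files that disappeared should have their vectors removed.
        "delete": sorted(p for p in previous if p not in current),
    }
```

Calling `plan_sync(old_scan, fingerprint_folder(folder))` on a schedule yields the documents to embed again and the ones to drop, so unchanged files are never re-processed.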

📊 Real-time System Monitoring

- Integrated Grafana and Prometheus for real-time system performance tracking

- Proactively detect and address potential issues before they escalate
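For readers who want to read the same metrics outside Grafana, the sketch below builds an instant-query URL for the Prometheus HTTP API (`/api/v1/query`) and flattens the JSON response. The base URL `http://localhost:9090` is an assumption (the Prometheus default port); the address GenAI Studio actually exposes may differ, and the helper names are hypothetical.

```python
from urllib.parse import urlencode

# PromQL for per-instance CPU utilization, based on the node-exporter
# metric node_cpu_seconds_total.
CPU_BUSY_PERCENT = (
    '100 * (1 - avg by (instance) '
    '(rate(node_cpu_seconds_total{mode="idle"}[5m])))'
)


def build_query_url(base_url: str, promql: str) -> str:
    """Return the instant-query URL for a PromQL expression."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def extract_values(payload: dict) -> dict[str, float]:
    """Flatten an instant-vector response into {instance: value}."""
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    values: dict[str, float] = {}
    for sample in payload["data"]["result"]:
        instance = sample["metric"].get("instance", "unknown")
        _timestamp, raw_value = sample["value"]  # value arrives as a string
        values[instance] = float(raw_value)
    return values
```

Fetching `build_query_url("http://localhost:9090", CPU_BUSY_PERCENT)` with any HTTP client and passing the decoded JSON to `extract_values` gives one CPU figure per monitored host.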

🚀 Model Conversion & Inference Runtime

- Added model conversion, along with the EdgeAI SDK for inference-side downloads and deployment. This makes it easier to deploy models to edge devices.

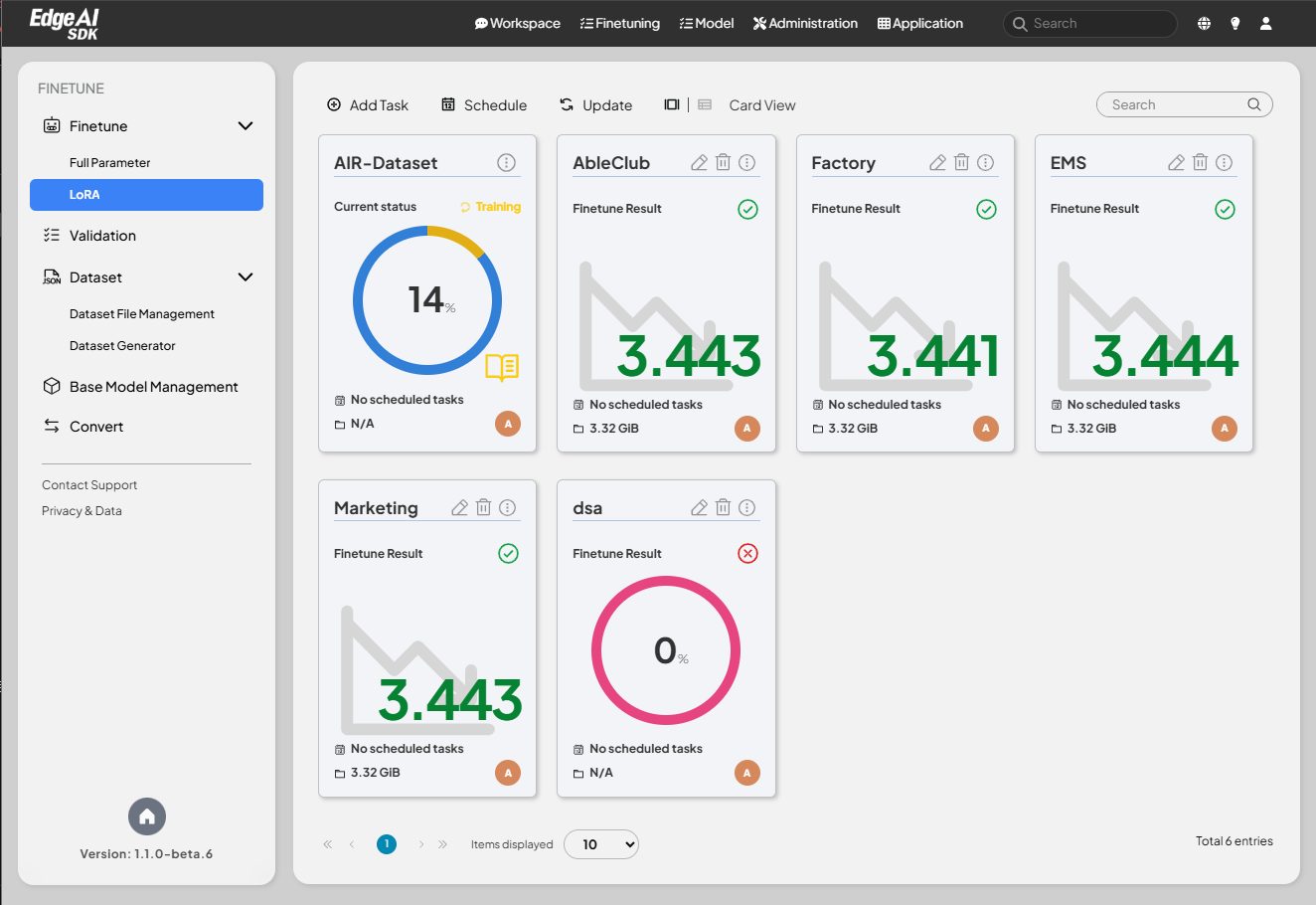

🎯 Enhanced Fine-Tuning with LoRA

- Integrated LoRA (Low-Rank Adaptation) fine-tuning support via Unsloth

- Compatible with inference models like DeepSeek for precise, customized adjustments
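To show why low-rank adaptation is lighter than full-parameter fine-tuning, the sketch below counts trainable weights for a single linear layer. LoRA freezes the original `d_out × d_in` weight matrix and trains two small matrices of shapes `d_out × rank` and `rank × d_in` instead. The layer size and rank used in the usage note are illustrative assumptions, not GenAI Studio or Unsloth defaults.

```python
def full_param_count(d_out: int, d_in: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is fine-tuned."""
    return d_out * d_in


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable weights for LoRA: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Share of the layer's weights that LoRA actually trains."""
    return lora_param_count(d_out, d_in, rank) / full_param_count(d_out, d_in)
```

For a 4096 × 4096 layer with rank 16, `lora_param_count(4096, 4096, 16)` is 131,072 against 16,777,216 for the full matrix, which is under 1% of the weights.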

⚙️ Upgrades & Maintenance

- AnythingLLM updated to v1.7.5, providing the latest features and improved security.

- Phison Firmware upgraded to NXUN202.00, enhancing hardware performance and stability.

Third-Party Updates

GenAI Studio utilizes the following components:

- node-exporter (1.8.2): Exposes desired host metrics to Prometheus.

- dcgm-exporter (4.0.0-4.0.1-ubuntu22.04): Exposes host GPU metrics to Prometheus.

- Prometheus (3.1.0): Collects desired metrics as a data source for Grafana.

- Grafana (11.4.0): Serves as the resource monitoring dashboard.

- Phison aiDAPTIVLink (NXUN202.00): Leverages middleware for model fine-tuning with full parameters.

- Ollama (0.6.2): Acts as the inference server.

- llama.cpp (full-cuda-b4897): Converts GGUF model file formats.

- vsFTP (3.0.5): Provides model files for downloading.

- Qdrant (1.12.4): Functions as the vector database.

- Flowise (2.2.7-patch.1): Automates RAGOps functionality and workflows.

- PostgreSQL (16.4): Serves as the relational database.

- Unsloth (2025.3.18): Performs model fine-tuning with LoRA mode.